Introduction to MEV

Maximum Extractable Value, or MEV, has been all the rage in crypto. MEV refers to the value extracted from users by reordering, inserting, and censoring transactions within blocks, as well as the profits from benign MEV such as arbitrage and liquidations. It was first mentioned back in 2014 on the Ethereum subreddit, by /u/pmcgoohan.

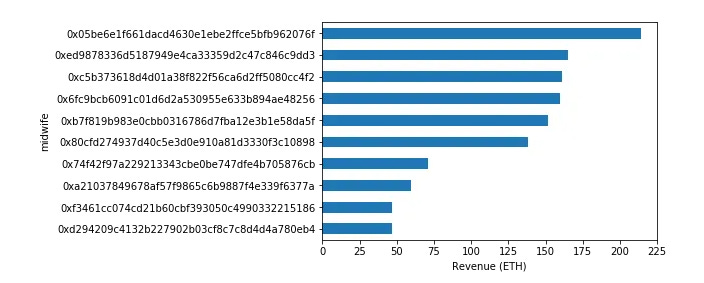

One of the earliest examples of quantifiable MEV came during the CryptoKitties craze. Specifically, it involved completing the pregnancies of CryptoKitties (giving birth to a new cat): anyone could finalize a pregnancy by calling giveBirth() on the smart contract after the cat’s birthing block, and receive a reward in ETH for doing so. This call could be front-run, resulting in profit (MEV). As Markus Koch pointed out back in 2018 (great read btw), early in the history of the game, accounts associated with a few people were the only ones giving birth to kitties. As time went by, other accounts began calling the giveBirth() method, seeing the profit freely available. Even so, only a few midwives (cat birth callers) accounted for most of the births.

It was also possible to see that the midwives were competing via gas fees (despite being relatively even in code efficiency, some were ostensibly more successful and profitable than others at landing the giveBirth() call). The conclusion made back then was that the birthing fee made CryptoKitties more expensive to play than it would have been without the mechanism. This is an example of MEV leading to poor transaction execution for the end user, as it spiked gas fees during the births.

Another example is front-running on Bancor, back in mid-to-late 2017. The possibility was pointed out in June 2017 by Phil Daian (Flashbots co-founder) and Emin Gün Sirer (Avalanche founder). They showed that miners would be able to front-run any transaction on Bancor, since miners were free to reorder transactions within a block they mined. Ivan Bogatyy took this one step further in August of the same year and built a program that could monitor and execute front-running opportunities on Bancor as a non-miner (just a searcher!), since blockchains and their mempools are public. It’s a great read if you want to get a view into the world of MEV in the earlier days.

Since then, MEV has evolved rapidly, and with the increase in on-chain economic activity from 2019 onwards with the advent of DeFi, it has become a critical component of protocol design. With the rise of L2s, bridges, app-chains and so forth, it is interesting to look into the potential implications the “modular” design space might have on MEV. But first, we must give an overview of the actors within MEV as it stands.

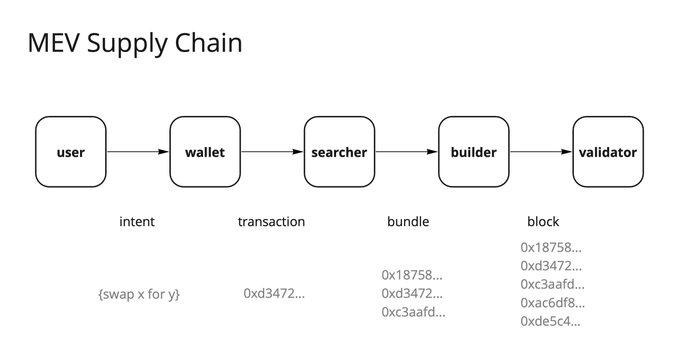

There are various actors within the MEV ecosystem. Searchers are usually the most profitable MEV participants (from a first-principles perspective, although more “general” value ends up with builders and validators as they aggregate value from many searchers), but there are other participants as well. Let's first explain who they are. It's also important to note that some participants collude more than others, as a result of protocol design. The participants (bar users and wallets) are searchers (S), builders (B) and validators (V). In the MEV supply chain, they play the following roles:

Searcher: Tries to find all extractable value on-chain through different methods. Searchers work with builders, as searchers are willing to pay high gas fees in order to have their transactions included. This is important because many of the things we take for granted, like arbitrage and liquidations, are performed by searchers. Searchers bundle transactions together and give them to builders.

Builder: Takes the bundles from searchers and puts them into blocks for proposers. Several bundles can be grouped together in one block, which can also contain other users’ pending transactions from the mempool. Bundles can target specific blocks for inclusion as well.

Validator: Validators perform consensus roles to validate the blocks. They sell blockspace to the profit-seeking searchers and builders and are in turn rewarded with part of the spoils. Keep in mind that the validators get both rewards from issuance (Proposer, attestor, sync) and execution rewards (MEV+Tips).

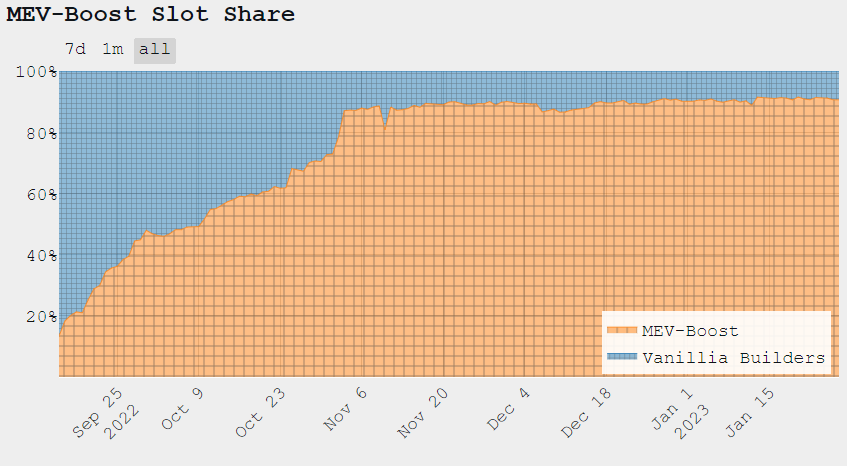

So why do some say that MEV is bad when it seems on the surface to provide value to the ecosystem and the security budget? MEV is competed for via Priority Gas Auctions (PGAs). PGAs are auctions where fees are competitively bid up to obtain priority ordering for transactions. Fee estimators used PGA-inflated gas prices as a reference, causing users to overpay for their transactions. It should be noted that this was primarily how it worked prior to MEV-Geth/Boost and Flashbots. After the release of MEV-Geth, much of the computation related to MEV was taken off-chain instead, via a permissionless side relay that allows MEV searchers to communicate directly with nodes and other participants, leading to a decrease in fee congestion pricing. This is also easier to see when you look at the share of vanilla builders versus MEV-Boost.
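To make the fee-estimator effect concrete, here is a toy sketch in Python. Everything in it is an assumption for illustration: the gas prices are made up, the bot names are hypothetical, and the median-based `naive_fee_estimate` is not how any particular wallet or client estimated fees.

```python
from statistics import median

def order_by_gas_price(txs):
    """Order transactions the way a fee-maximizing miner would:
    highest gas price first."""
    return sorted(txs, key=lambda tx: tx["gas_price"], reverse=True)

def naive_fee_estimate(included_txs):
    """Hypothetical estimator: suggest the median gas price of the last block."""
    return median(tx["gas_price"] for tx in included_txs)

# Made-up gas prices in gwei.
user_txs = [
    {"sender": "user1", "gas_price": 20},
    {"sender": "user2", "gas_price": 22},
    {"sender": "user3", "gas_price": 25},
]
# Final bids of four bots that bid each other up for the same opportunity.
pga_bids = [
    {"sender": "botA", "gas_price": 300},
    {"sender": "botB", "gas_price": 320},
    {"sender": "botC", "gas_price": 335},
    {"sender": "botD", "gas_price": 350},
]

quiet_block = order_by_gas_price(user_txs)
pga_block = order_by_gas_price(user_txs + pga_bids)

print(naive_fee_estimate(quiet_block))  # 22
print(naive_fee_estimate(pga_block))    # 300
print(pga_block[0]["sender"])           # botD
```

A user who trusts the second estimate pays bot-level prices for an ordinary transfer, which is the overpayment described above.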

At its core, the issue stems from the fact that users and bots are in the same pool, independent of whether they go after MEV or not.

- There is a huge incentive for participants to extort users for profit.
- There is a huge incentive for participants to centralize (move closer to validators/builders).
- Extortion can be made credible via centralization of coordinators (whoever executes the mechanism of the shared-layer that rollups offload tasks to such as ordering, settlement, security, liquidity, messaging and so on) despite the system being permissionless.

Thus, extortion can and will happen (through centralization).

There's also an increasingly high barrier to entry for participating in MEV, leading to further centralization. This is partly because of the constant upkeep that searchers have to do on their MEV bots. The competition is rough, and the code is constantly updated. If you find an edge, you’re unlikely to share it, although, as a result of blockchains being open ledgers, edges will eventually be found. The MEV “marketplaces”, like Flashbots, are also quite centralized, since the vast majority of blocks flow through Flashbots (70%!), although MEV-Boost relays are permissionless and have several endpoints (11 according to Flashbots).

It is important to note that there are plans to decentralize the entire MEV supply chain, and we believe that the Flashbots team is well aware of the nuances of the current implementation. The centralized Flashbots relay does not directly own any validator power. Nor can Flashbots censor non-MEV-related transactions. Validators can still include transactions that did not go through the Flashbots relay. Flashbots is clearly a centralizing force, but the alternative of a non-Flashbots world certainly feels like it would be worse. As participants in MEV-Boost make a larger income on average than non-participants, it leads to further centralization among the actors utilizing it as well.

Fear not, though, “we’re going to decentralize!” as the age-old answer often is. Although, if Flashbots can’t come to a consensus on the ethics of MEV (as alluded to during a recent podcast w/ Phil Daian), how will the wider community agree on it?

If Flashbots as a system eventually becomes a DAO, it could possibly become decentralized itself. The situation is similar to that of Lido and others. To deflect claims of centralization, many protocols say they will become a decentralized DAO (eventually), just like Flashbots. You could argue that this merely spreads the centralization across more layers, such that it is harder to see. However, there’s some limited merit in adding more layers. At the end of the day, in most cases, some decentralization is better than none, although this depends on how attackable that decentralization is.

So why is it so vital for actors to participate?

Validators want to collect the most fees/MEV possible in order to offer the highest staking rate on their users’ ETH. This gives validators an incentive to pick the highest-value block created by builders.

Builders will aggregate transactions from users, MEV searchers and their own private order flow to make the highest value blocks possible:

- Builders with exclusive private order flow should mainly be able to create the most valuable block.
- A builder having its blocks included consistently incentivizes more private order flow to arrive at this builder, since users and searchers want their transactions included swiftly.
- Builders can also sell future block space upfront, so market makers or protocols can secure block space for their transactions or users.
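The incentives above can be sketched as a tiny block auction. This is a simplified model under our own assumptions, not how any real builder or relay works: bundles are packed greedily by payment per gas, each builder is assumed to bid the full value of its block, and the `Bundle` type, names and numbers are all hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Bundle:
    name: str
    gas: int      # gas used by the bundle
    payment: int  # payment to the builder, in milli-ETH (made-up numbers)

def build_block(bundles, gas_limit):
    """Greedy packing: take the bundles with the best payment per gas first."""
    block, gas_used = [], 0
    for bundle in sorted(bundles, key=lambda b: b.payment / b.gas, reverse=True):
        if gas_used + bundle.gas <= gas_limit:
            block.append(bundle)
            gas_used += bundle.gas
    return block

def block_value(block):
    return sum(bundle.payment for bundle in block)

def pick_block(blocks_by_builder):
    """The validator simply takes the most valuable block on offer."""
    return max(blocks_by_builder, key=lambda name: block_value(blocks_by_builder[name]))

public_flow = [
    Bundle("arb", 100, 500),
    Bundle("liquidation", 200, 400),
    Bundle("swap", 300, 100),
]
private_flow = [Bundle("private_order", 150, 600)]

blocks = {
    "builder_public_only": build_block(public_flow, gas_limit=500),
    "builder_with_private_flow": build_block(public_flow + private_flow, gas_limit=500),
}

print({name: block_value(block) for name, block in blocks.items()})
# {'builder_public_only': 900, 'builder_with_private_flow': 1500}
print(pick_block(blocks))  # builder_with_private_flow
```

Both builders see the same public flow, so the one with exclusive order flow wins the slot, which is exactly what attracts more private flow to it over time.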

MEV block production and transaction inclusion dynamics in essence create an unfair market. The market structure cannot be improved without direct intervention. The protocol as such must control the market, or have mechanics built out to support it (as we’ve seen). Cooperation between proposers (who have all the power in the market) seems much more worrying, especially in a semi-permissionless, half-decentralized sequencer setup. However, if slashing takes place in the event of any extraction of MEV, what is the reason to become a sequencer apart from transaction fees?

This is especially true if you don't emit tokens for security, as you derive security from a base layer. It leads to the belief that an SBV (Searcher-Builder-Validator/Sequencer) supply chain must be in place. That could lead to trusted social contracts between actors as well. MEV could also in reality become a “problem” addressed more on the application side of things, leaving the protocol without direct interference. But in some cases, and we will make that case, MEV can be seen as increasing the security budget of the underlying protocol.

In competitive block markets, where there’s competition for blockspace, applications will eventually revert to “MEV” (as in priority gas fees). These transactions never revert: builders don’t waste blockspace on unprofitable reversions. MEV transactions also confirm quicker, since searchers convert that MEV into priority fees, giving the transaction higher priority. Applications can as such incorporate MEV into their designs, and use it to buy privileged positions in the blockspace market within a specific protocol.

By enabling searchers and hunters to easily look for good, predictable state changes resulting in MEV (so a marketplace), you can incentivize good MEV that adds to the security and efficiency of protocols. These profitable actions can be coupled with the sequencer itself, who, as a result of bonding tokens to become one (maybe with the added possibility of delegation from other users to gain a fraction of the profits), could share in that profit. This would allow the value to land in the hands of both users and sequencers, while adding to the security budget of the protocol itself. So the user shares in the value they themselves create. You can only scale a blockchain's decentralization with its success and adoption (maturity).

MEV; good, bad or just existent?

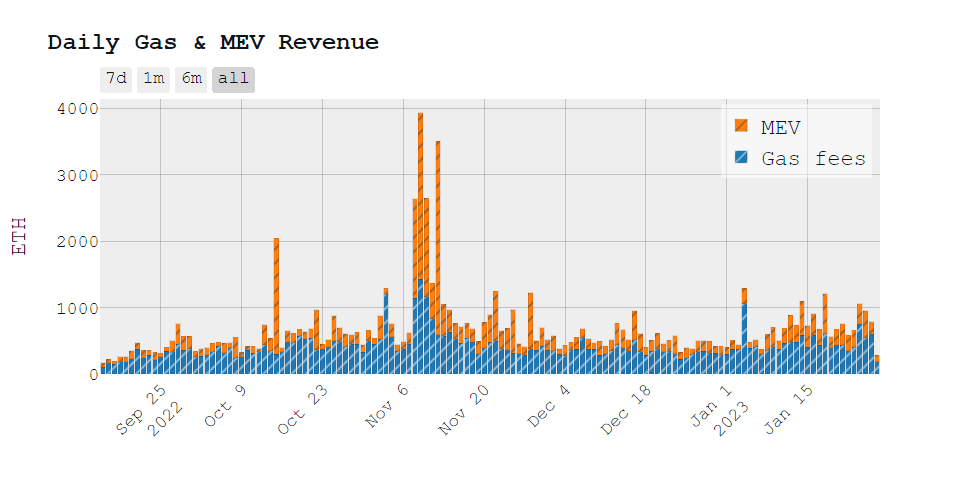

A way to reason about the role of MEV is by looking at it through the lens of the economic security of a chain. In general, this is seen as the value at stake to “protect” that chain: the amount of assets staked, or the amount of money needed to perform a 51% attack in the case of Bitcoin. MEV is a critical component of this, as it enhances the yield for validators/miners and therefore allows greater economic value to be put up for stake.

So there are basically two lines of reasoning here. The first is that MEV is bad: value being (unknowingly) extracted from the user is bad for UX, and sophisticated users extract it from less sophisticated ones. As a result, a myriad of solutions to prevent MEV have popped up. Things like threshold cryptography to encrypt transactions before ordering, and batch auctions with a uniform execution price, are used to prevent or minimize MEV extraction.

Another way to look at this is that MEV is actually a force for good. The reasoning here is that MEV extraction enhances the (potential) value of the blockspace being sold by validators/miners, and therefore allows for more yield for them. As a result of yields being higher, it stands to reason that validators/miners are willing to put up more assets in order to get access to these yields. This results in the chain having more economic security. Another way of achieving this economic security is by artificially enhancing the yield, by issuing more native tokens/rewards. However, this comes at the cost of token holders, as they face inflation on their assets. Viewed this way, MEV is actually a force for good for token holders, as it allows them to benefit from economic security while facing less inflation.

It boils down to where you want the pain to land. By allowing MEV to be extracted, you limit the inflation required to reach a certain level of economic security, at the cost of user experience. Issuance (inflation) thus becomes a needed utility to reach certain adoption metrics, which eventually will allow you to lower it to insignificant amounts, while MEV can contribute to the security budget (and as such to the decentralization of the protocol itself).
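The trade-off can be put into rough numbers. The sketch below rests on a strong simplifying assumption of ours: stakers demand a fixed yield on the stake, and that yield is paid out of fees, MEV and issuance. The function name, the yield and all figures are hypothetical.

```python
def required_issuance(target_stake, required_yield_bps, fees, mev):
    """Issuance needed so that stakers earn the required yield on target_stake.

    required_yield_bps is the demanded yearly yield in basis points (500 = 5%).
    All amounts are in the chain's native token.
    """
    required_rewards = target_stake * required_yield_bps // 10_000
    return max(0, required_rewards - fees - mev)

# Same security target, with and without MEV flowing to validators.
without_mev = required_issuance(1_000_000, 500, fees=10_000, mev=0)
with_mev = required_issuance(1_000_000, 500, fees=10_000, mev=15_000)

print(without_mev)  # 40000
print(with_mev)     # 25000
```

In this toy model, every token of MEV that reaches validators is a token that does not have to be printed, which is the "less inflation" side of the argument; the cost to users does not show up in the formula at all.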

However, by preventing or minimizing MEV (if even possible), you improve the general user experience at the expense of token holders, as you need more inflation to maintain economic security. Another, perhaps more far-fetched, line of reasoning about the level of MEV extraction is that for an entirely rational actor, extracting the maximum amount might not be optimal, since this would be such a burden on users that they would stop being economically active on-chain, decreasing the overall amount that can be extracted from them over their entire lifespan as users.

And while we view MEV through the lens of the base layer in the above section, the same argument can be made more on an application level (although our belief is these will intertwine more with app-chains). Research from Mekatek shows that on a DEX level, you either allow for arbitrage and benefit LPs, or don’t allow for it and compensate LPs with inflation at the expense of token holders. This is a similar dynamic as we have just presented on an infrastructure protocol level.

So is MEV good or bad? The fairest answer we can give is, it's both and neither. Some forms of MEV can be seen as bad from the point of view of one, but as rewarding from the point of view of another. Overall, we are of the belief that MEV is neither good nor bad, it just is.

Decentralizing Sequencers

The question of how much value is optimally extracted becomes particularly important when you look at MEV on layer 2s. This is because, as it currently stands, most sequencers are centralized. They are entirely in charge of ordering within a block, and consequently can determine how much to extract. However, as with any point of centralization in crypto, the goal is to move to a decentralized sequencer set, at which point you run into the same incentive game theory as a PoS L1. The economics behind a sequencer can be shown as:

yield = issuance (inflation) + sum of fees collected - cost of DA & dispute resolution

(Keep in mind that there’s overhead to account for as well, in regard to the cost of running hardware.)

By preventing or limiting the MEV that can be extracted from an L2, you decrease the sum of fees collected and therefore make it less profitable to run a sequencer or participate in a decentralized sequencer network. Furthermore, there are various considerations to take into account when decentralizing a sequencer set.
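As a quick worked example of the sequencer economics, here is the formula as a small Python function. The function and parameter names are ours, MEV is treated as part of the fees collected, and the numbers are made up purely to show the direction of the effect.

```python
def sequencer_yield(issuance, fees_collected, da_cost, dispute_cost, hardware_overhead=0):
    """yield = issuance + sum of fees collected - cost of DA & dispute resolution,
    minus the hardware overhead mentioned in the note."""
    return issuance + fees_collected - da_cost - dispute_cost - hardware_overhead

base_fees = 50
mev_income = 30  # hypothetical MEV that reaches the sequencer as fees

with_mev = sequencer_yield(100, base_fees + mev_income, da_cost=40, dispute_cost=10, hardware_overhead=5)
without_mev = sequencer_yield(100, base_fees, da_cost=40, dispute_cost=10, hardware_overhead=5)

print(with_mev)     # 125
print(without_mev)  # 95
```

With costs held fixed, removing MEV has to be made up for by issuance or by higher user fees if the sequencer's yield is to stay the same.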

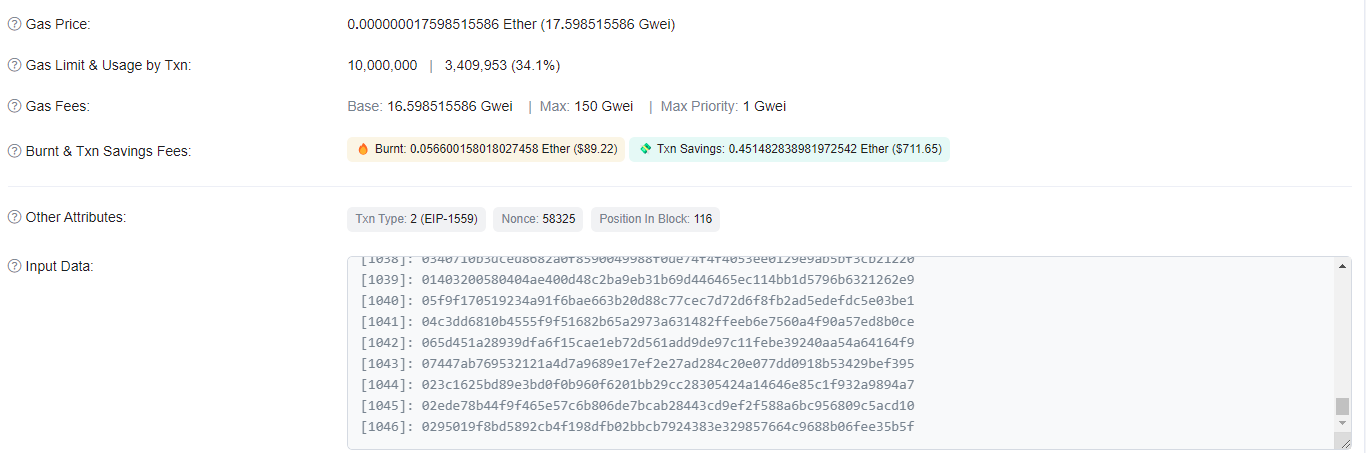

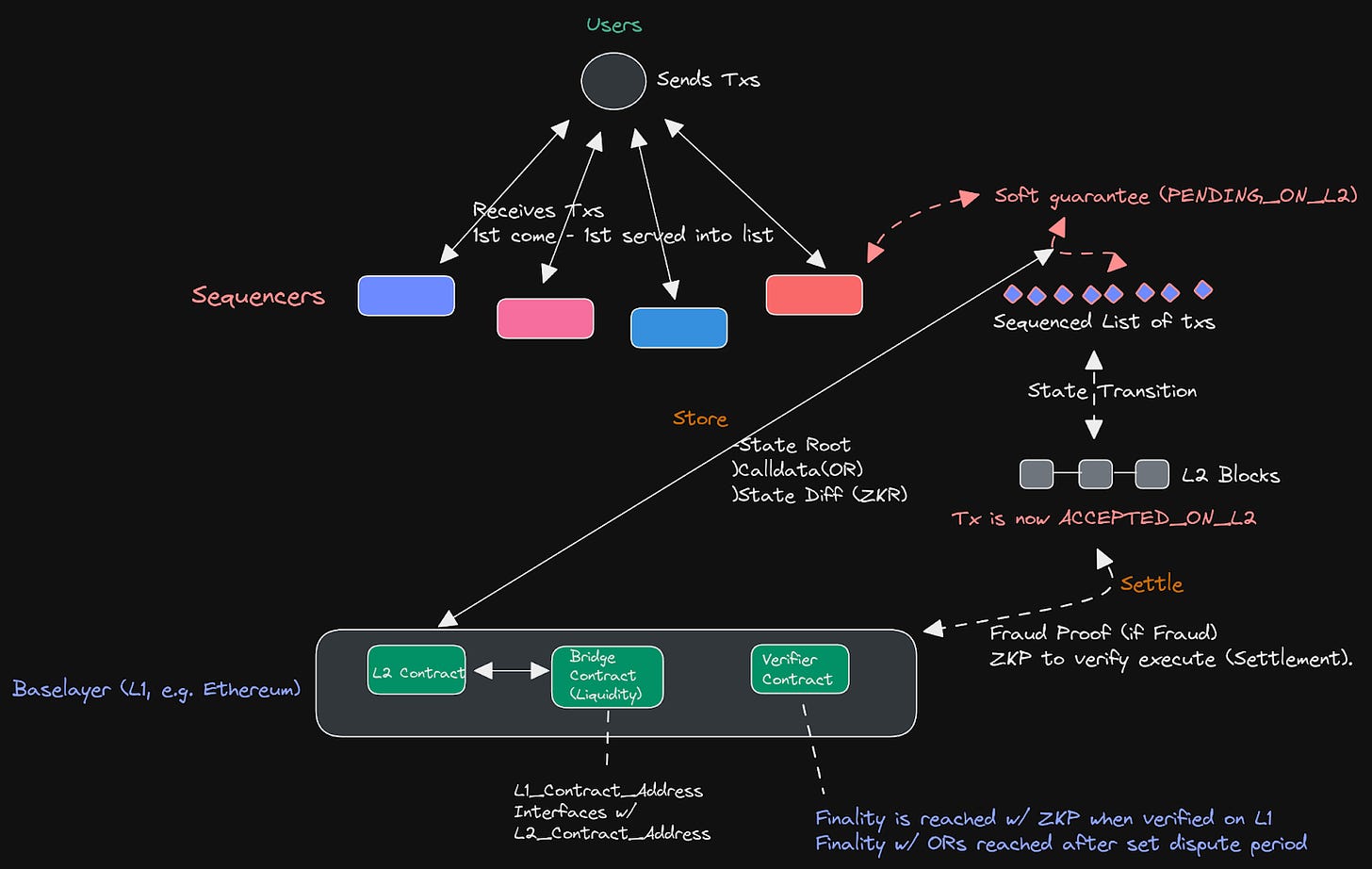

The reason why decentralizing sequencers is needed is clear: it removes trust assumptions from the centralized teams building the rollups. Currently, rollups have a single sequencer (run by the team) that executes, batches and finalizes all transactions. This is obviously not ideal in the event of a sequencer failure (although exit withdrawals can help here). Furthermore, it places massive trust in the team that controls both the sequencer and the multi-sig smart contracts that allow for upgradeability, and fault proofs in the case of fraud. The team also has the ability to reorder transactions and extract MEV. Transactions are currently finalized under the assumption that the sequencer will not touch, extract or extort any MEV or transactions, and that assumption is what allows sequencers to finalize transactions as soon as they’re received. This is what is referred to as soft finality. In some cases, actual finality won’t be reached until up to 10 hours later, in the case of ZK rollups. A great example of this can be found if you look at the SHARP STARK verifier contract from StarkWare on Ethereum, which is where the ZK proof (recursively proven from a myriad of transactions) is finalized. This is usually done every 3–10 hours, which is quite a delay from when the first transaction in the batch was handled.

This is done to lower the cost of transactions by putting as many transactions in a single proof as possible; otherwise, the cost of transactions would skyrocket. This is how transactions are finalized on rollups: they receive a soft finality guarantee that they will eventually be finalized on the underlying layer (in this case Ethereum). In the case of Optimistic rollups, transactions are instead batched up, and the state root (same as ZKRs) alongside the calldata (transaction state; with ZKRs this is just a state diff) is sent to the L2 contract on Ethereum. Because of dispute period requirements (the ability to roll back a fraudulent transaction), actual finality of transactions on Optimistic rollups doesn’t happen until around 7 days later (an agreed-upon number). This means their soft finality guarantee lasts quite some time, to allow watchers to prove any fraud that has happened. These are all issues that need to be addressed when we decentralize the sequencer set: soft finality needs to be guaranteed, and eventual finality needs to be reached while allowing the proofs (validity or fraud) to function as intended.
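Why batching lowers costs can be seen with some back-of-the-envelope arithmetic. The sketch assumes a cost model of our own (one fixed on-chain verification cost per batch plus a small per-transaction data cost), and the gas figures are invented for illustration rather than measured from any rollup.

```python
def cost_per_tx(fixed_proof_cost, data_cost_per_tx, batch_size):
    """Amortized L1 cost of one rollup transaction in a batch."""
    return fixed_proof_cost / batch_size + data_cost_per_tx

FIXED_PROOF_COST = 5_000_000  # hypothetical gas to verify one proof on L1
DATA_COST_PER_TX = 500        # hypothetical gas of data per transaction

small_batch = cost_per_tx(FIXED_PROOF_COST, DATA_COST_PER_TX, batch_size=10)
large_batch = cost_per_tx(FIXED_PROOF_COST, DATA_COST_PER_TX, batch_size=10_000)

print(small_batch)  # 500500.0
print(large_batch)  # 1000.0
```

The flip side is the one described above: waiting to fill a large batch is what stretches the gap between soft finality and actual finality.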

Underneath is a picture of the way rollups function currently:

Keep in mind that L2 finality is not finality when you depend on the L1 for consensus. This is part of the trade-off you make when you move the consensus of the chain from the rollup to the underlying “settlement” layer, unless you handle consensus on the rollup as well (which would lose the derived security, but could gain sovereignty and utilize a DA layer for data availability guarantees). However, the obvious trade-off there is that you fragment liquidity and increase the difficulty of interoperability.

Next, let’s explore some possibilities for decentralizing rollup sequencers, and what kind of implications this can have for MEV.

First off, to decentralize the sequencer set means to remove the trust assumption that we have in a single sequencer run by a team that has incentives not to touch MEV or reorder transactions. This setup gives a certain “protection” against MEV. However, if you move to decentralize the sequencer set, possibly through the bonding of tokens, you open up the possibility for a permissionless or semi-permissioned set of validators/sequencers to extract value, since they have committed tokens of the rollup (such as with the Fuel token model). This means there needs to be a mechanism in place to ensure block ordering correctness, along with slashing. The reason for this is to ensure that the sequencer set keeps liveness, stays fair and doesn’t interfere with transactions, for example by reordering them or placing its own transactions first in the pool even if they paid less in gas fees. This usually warrants a public mempool, or guarantees of inclusion.

Underneath are some examples of how this could look:

- Proof-of-Stake with Leader Election. This would likely be with Tendermint’s leader election or something akin to it (since we derive consensus from the base layer) alongside a slashing module (like the Cosmos SDK one), or something similar. This is at least the option that most rollups that I’ve talked to seem to be going with. However, there are other options as well. With PoS, if you allow the largest holder to get the largest share of blocks, it will inevitably also lead to centralization. It could also revert to a MEV auction, since you bid with the opportunity cost instead of outright payments.
- Fair Ordering Sequencing (Arbitrum in particular has looked at this). For this to work properly, you’d also need a bond that is slashable (which could be the same for everyone, to keep the essence of fairness). In this setup, you “pay” [opportunity cost per block * blocks staked] for the right to extract MEV over that period. For fair ordering to work, you’d need a fair ordering protocol that picks a fair sequence of events to eventually publish. This will usually come through honest majority assumptions, such as in the form of first-come, first-serve ordering with a merged transaction list from the various sequencers. The system is, as such, first-come, first-serve with a 51%-of-N honesty assumption. In the case of dishonesty, the system could commit to a social fork (if they have sovereignty).

The problem with the second option in particular is that it becomes a latency race to be included first, which will most likely lead to builders and searchers moving as close as humanly possible to the sequencers in question (much like what we’ve seen in TradFi, and even in the crypto world, with network latency optimization). This obviously causes geographical centralization.
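To illustrate what a merged first-come, first-serve list under an honest-majority assumption could look like, here is a minimal sketch. It uses the median receive time reported across sequencers, which is our own simplification for illustration and not the protocol Arbitrum or anyone else has proposed; the sequencer names, timestamps and the `merge_fair_order` helper are hypothetical.

```python
from statistics import median

def merge_fair_order(reports):
    """Merge per-sequencer receive times into one ordering.

    reports maps sequencer name -> {tx_id: receive_time}. A transaction is
    only ordered once a majority of sequencers has reported it, and it is
    placed by the median of the reported times.
    """
    quorum = len(reports) // 2 + 1
    seen = {}
    for times in reports.values():
        for tx_id, received_at in times.items():
            seen.setdefault(tx_id, []).append(received_at)
    ranked = {tx: median(ts) for tx, ts in seen.items() if len(ts) >= quorum}
    # Tie-break on tx id so every honest node derives the same list.
    return sorted(ranked, key=lambda tx: (ranked[tx], tx))

reports = {
    "seq1": {"a": 1.0, "b": 3.0, "c": 5.0},
    "seq2": {"a": 2.0, "b": 4.0, "c": 6.0},
    # A dishonest sequencer claims it saw "c" first and injects its own "d".
    "seq3": {"c": 0.0, "a": 9.0, "b": 9.0, "d": 0.5},
}

print(merge_fair_order(reports))  # ['a', 'b', 'c']
```

A single dishonest sequencer cannot pull `c` to the front or sneak in `d`, but the ordering is still driven by who reaches the honest sequencers first, which is exactly the latency race described above.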

There have also been some discussions surrounding MEV auctions (bidding for the right to extract MEV and propose a block) for sequencing slots (particularly from the Optimism camp). However, we feel that it pushes towards centralization despite its permissionless nature, and it has a very high barrier to entry. Although some of the aforementioned methods also lead to “centralization”, it’s way less obvious and harmful to the protocol.

Generally, the fair ordering mechanisms aren’t able to “prevent” or mitigate MEV in any way that doesn’t add considerable negative trade-offs. However, things like threshold encryption, time lock puzzles, batch auctions and meta mempools (unbundling the mempool and block builder role with a shared aggregation/sequencer layer—which could become useful in the area of cross-domain MEV, which we’ll also touch upon later) might have more success in providing such properties.

Now that we’ve seen how sequencers currently work and some methods for possibly decentralizing them—let’s try to picture how a decentralized sequencer set looks, and what it needs to keep finality guarantees and make sure the protocol functions as intended.

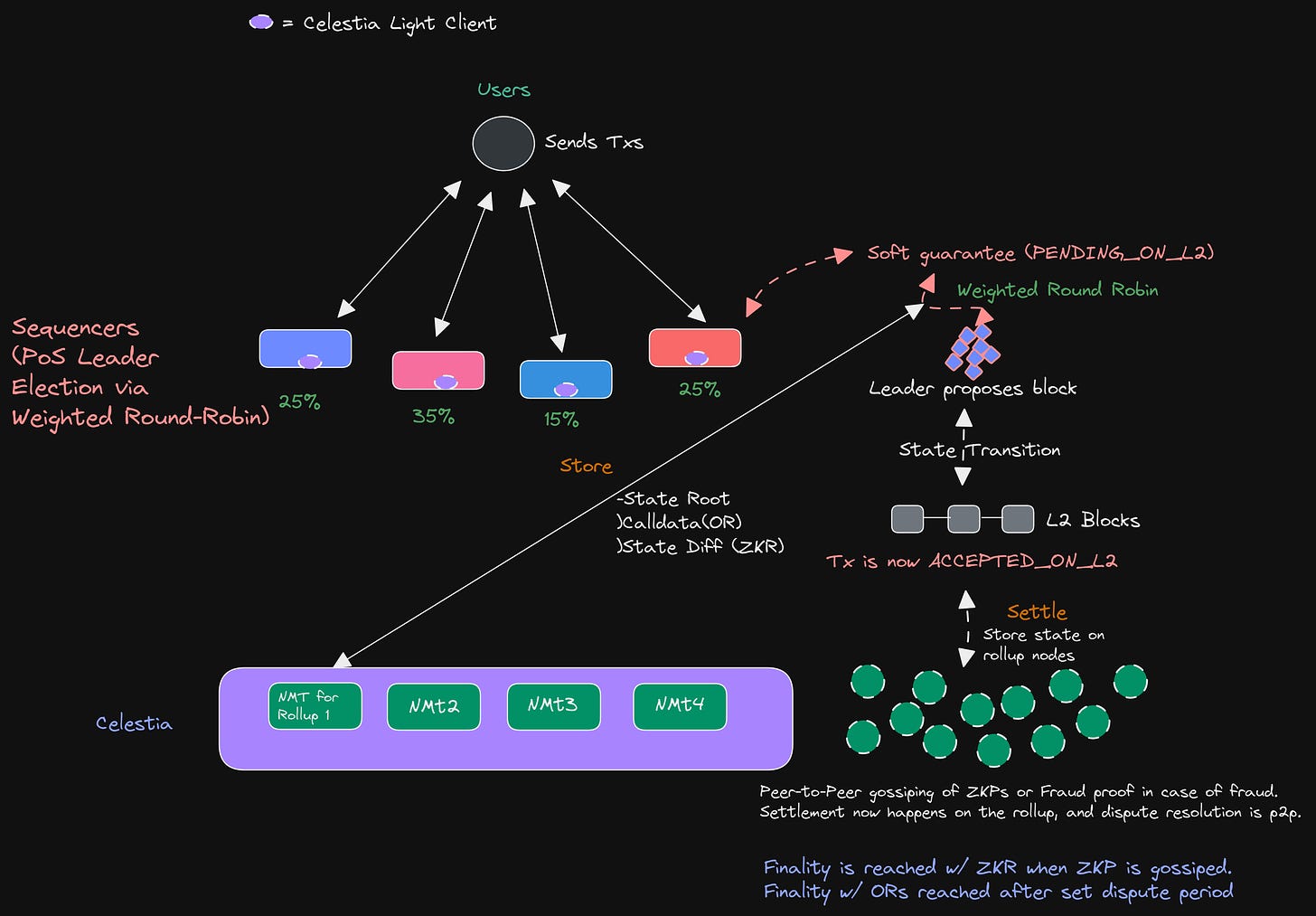

On top is a sequencer setup in a smart contract rollup. Underneath, we’ll showcase how this would look with a sovereign rollup (a rollup on top of a DA layer) via PoS leader election. In the fair sequencing setup, there isn’t much incentive to buy and stake the required token amount to become a sequencer, so the total cryptoeconomic security of the sequencer set stays rather low.

In this setup, the way it works is quite simple: when choosing the sequencer for a certain round, a committee simply utilizes a weighted round-robin method based on stake weight. With this method, any validator can easily calculate the eligible proposer for a given round. If you want to read about NMTs on Celestia, check out our data structures article here. (Keep in mind that the dispute window could potentially be lowered in this setup, as you’re not in the extremely competitive blockspace of Ethereum.)
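The weighted round-robin selection can be sketched in a few lines. This is a simplified take on the proposer-priority idea used in Tendermint (add each validator's stake to its priority every round, pick the highest, then subtract the total stake from the winner); it leaves out details a production implementation handles, such as validator set changes, and the stakes and names are made up.

```python
def proposer_schedule(stakes, rounds):
    """Return the proposer for each round under stake-weighted round-robin."""
    priorities = {name: 0 for name in stakes}
    total_stake = sum(stakes.values())
    schedule = []
    for _ in range(rounds):
        # Every validator gains priority in proportion to its stake.
        for name, stake in stakes.items():
            priorities[name] += stake
        # Highest priority proposes; ties go to the alphabetically first name.
        leader = max(sorted(priorities), key=lambda name: priorities[name])
        priorities[leader] -= total_stake
        schedule.append(leader)
    return schedule

stakes = {"A": 3, "B": 1, "C": 1}
print(proposer_schedule(stakes, rounds=5))  # ['A', 'B', 'A', 'C', 'A']
```

Because the schedule is a deterministic function of the stake distribution, any validator can compute who is eligible to propose in a given round, and a sequencer with 3/5 of the stake gets 3 of every 5 slots.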

There’s also been some research and discussion (2017, on the Tendermint repo) into randomizing leader election, which could be a way to get a setup similar to fair ordering, but with a random proposer (leader) instead of first-come, first-serve. However, this disincentivizes sequencers from buying and staking more tokens (which weighted round-robin incentivizes), which could lower the economic security of the protocol.
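For contrast with stake weighting, a randomized election could look roughly like the sketch below. It is an illustration under our own assumptions, not the scheme discussed on the Tendermint repo: the leader is derived from a hash of a shared seed and the round number, and where that seed comes from (and how to keep it from being biased) is left open. The `random_leader` helper and the names are hypothetical.

```python
import hashlib

def random_leader(sequencers, seed, round_number):
    """Pick a leader uniformly, independent of stake.

    seed is a bytes value every participant agrees on (assumed to exist).
    """
    ordered = sorted(sequencers)  # same order on every node
    digest = hashlib.sha256(seed + round_number.to_bytes(8, "big")).digest()
    # The modulo introduces a negligible bias for small sets; fine for a sketch.
    return ordered[int.from_bytes(digest, "big") % len(ordered)]

sequencers = ["A", "B", "C"]
seed = b"hypothetical-shared-seed"
leaders = [random_leader(sequencers, seed, r) for r in range(5)]
print(leaders)
```

Note that stake never enters the function: a sequencer with ten times the bond gets the same chance per round as everyone else, which is why this design gives little reason to stake more.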

MEV extraction differs between the two setups, and can result in MEV being distributed and extracted differently. There are other concerns to keep in mind, such as finality guarantees and disincentivization methods for MEV, which we’ll cover in a second part alongside the various actors that populate the space in modular MEV, and how collusion can happen. We’ll also look at shared sequencers and meta mempool setups, so look out for another part in the coming weeks!